1. Introduction

Machine Learning (ML) methods have proven worthy of complementing human analysis. Splunk has a built-in ML toolkit that has gained a lot of traction over the years, which gave us the idea to test it for Diagnostics. But first, we must ask why ML methods are necessary. The user performs an action, which is added as an event to the Splunk database. So, how does ML help the user?

At first, ML may not seem like the best approach, since the consequences of a user action appear almost instantaneously after the action is performed. However, the relationship is not always one-to-one. Sometimes several other actions accumulate and together cause the problem. Such a relation would be non-linear, and ML methods are well suited to problems of this kind.

In my master’s thesis, I implemented a Deep Learning (DL) model and attempted to interpret and explain the model’s behaviour. ML and DL models are often termed black-box models, since they do not properly explain how they arrive at their results. It’s an aspect that I found interesting, and I wanted to explore whether such models can be interpreted for specific use cases. This is one of the reasons why the Splunk ML toolkit is appealing: the interface is simple to understand and interpret. Even though it’s solution-based, we can interpret the model’s results to a certain extent.

The idea is to use the ML toolkit provided by Splunk and explore ways to apply it to data from Diagnostics. We start with the following use case.

- Identify or predict which user action could be the reason for high memory consumption or a decrease in performance

- Datasets used: UI action, JVM statistics

- Actionable insights:

  - Reduce cost for the user

  - Make sure that the user action does not take place, is reduced, or is limited

  - Advise the developer to investigate why the user action has high consumption

  - Advise the developer to allocate more system memory

2. Methods Implemented

2.1 Random Forest Regressor for predicting tenured space and user action

Random Forest (RF) is an ensemble learning algorithm for classification and regression tasks. It creates a collection of decision trees by randomly selecting subsets of the data and features to train each tree independently. The final prediction is obtained through a voting (classification) or averaging (regression) mechanism, making Random Forest more accurate, robust, and resistant to overfitting than individual decision trees.
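The bootstrap-and-average idea described above can be sketched in a few lines. This is a simplified illustration and not the Splunk implementation: each "tree" is a one-split stump, each stump sees one randomly chosen feature, and the data, function names and parameters are all made up for the example.

```python
import random
from statistics import mean

def fit_stump(rows, targets, feature):
    """Fit a one-split regression tree (a stump) on a single feature."""
    best = None
    values = sorted({r[feature] for r in rows})
    for lo, hi in zip(values, values[1:]):
        threshold = (lo + hi) / 2
        left = [t for r, t in zip(rows, targets) if r[feature] <= threshold]
        right = [t for r, t in zip(rows, targets) if r[feature] > threshold]
        lm, rm = mean(left), mean(right)
        sse = sum((t - lm) ** 2 for t in left) + sum((t - rm) ** 2 for t in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, lm, rm)
    if best is None:  # feature is constant in this sample: predict the mean
        m = mean(targets)
        return (feature, float("inf"), m, m)
    return (feature, best[1], best[2], best[3])

def fit_forest(rows, targets, n_trees=25, seed=0):
    """Train each stump on a bootstrap sample and one random feature."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]  # sample with replacement
        feature = rng.randrange(len(rows[0]))           # random feature choice
        forest.append(fit_stump([rows[i] for i in idx],
                                [targets[i] for i in idx], feature))
    return forest

def predict(forest, row):
    """Regression output: the average of the individual stump predictions."""
    return mean(lm if row[f] <= thr else rm for f, thr, lm, rm in forest)

# Made-up data: feature 0 drives the target, feature 1 is uninformative.
rows = [[x, x % 2] for x in range(10)]
targets = [10.0 if x < 5 else 50.0 for x in range(10)]
forest = fit_forest(rows, targets)
low, high = predict(forest, [1, 0]), predict(forest, [8, 0])
```

Here `low` comes out smaller than `high`, because the stumps that split on feature 0 separate the two groups, while the stumps on feature 1 contribute the same value to both predictions.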

Figure 1: ML regressor methods

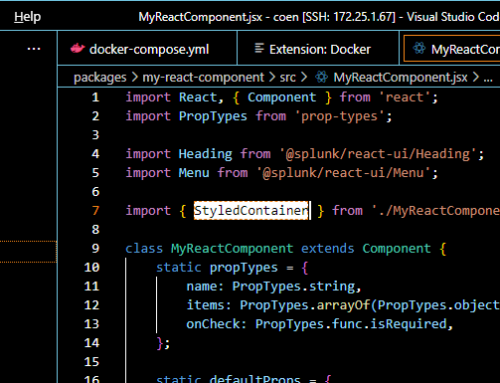

In our case, we use the RF Regressor algorithm. To learn more about Random Forest, refer to Splunk’s documentation here. In the Splunk ML toolkit, we first standardise the input features and the target from the JVM statistics table using the StandardScaler algorithm. The query is shown in Figure 2:

Figure 2: Query to relate JVM and user action

Explanation of the query:

- We use a multi-search to join the user action and JVM statistics dataset.

- A span of 5 minutes is chosen to relate all the user actions that occur within that interval with the memory used and recorded.

- A new variable, ‘label’, is created where ui_value, user_action, handler_class and message are concatenated to provide a unique reference to the activity performed by the user.

- The xyseries command is used to pivot the user action values into dummy columns. (Dummy columns have binary values, with the user action value as the column name. For each record, the value is True if the user action was performed and False if not.)

- The fit command is used for normalising the data.
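The steps listed above can be mirrored outside Splunk in plain Python. This is only a sketch to make the data shape concrete: the event dictionaries, the ":" separator and the helper names are assumptions, and the scaling uses the population standard deviation, which may differ from the convention of the scaler used in the toolkit.

```python
from collections import defaultdict
from statistics import mean, pstdev

SPAN_SECONDS = 5 * 60  # the 5-minute span from the query explanation

def bucket(ts):
    """Floor an epoch timestamp (in seconds) to the start of its span."""
    return ts - ts % SPAN_SECONDS

def make_label(event):
    """Concatenate the four fields into one identifier for the user action."""
    return ":".join([event["ui_value"], event["user_action"],
                     event["handler_class"], event["message"]])

def pivot_actions(events):
    """Return {span_start: {label: bool}}, one dummy column per label."""
    seen = defaultdict(set)
    for e in events:
        seen[bucket(e["time"])].add(make_label(e))
    labels = sorted(set().union(*seen.values())) if seen else []
    return {b: {lab: lab in present for lab in labels}
            for b, present in seen.items()}

def standardise(values):
    """Scale a column to zero mean and unit variance."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma if sigma else 0.0 for v in values]

# Hypothetical events; the field values follow the label format in the text.
events = [
    {"time": 1000, "ui_value": "Tab Label", "user_action": "tab_changed",
     "handler_class": "tab_box", "message": "tab_changed()"},
    {"time": 1100, "ui_value": "OK", "user_action": "complete",
     "handler_class": "sw_message_dialog", "message": "complete()"},
    {"time": 1300, "ui_value": "OK", "user_action": "complete",
     "handler_class": "sw_message_dialog", "message": "complete()"},
]
table = pivot_actions(events)
scaled = standardise([1.0, 2.0, 3.0])  # e.g. one JVM statistics column
```

The first two events fall into the same 5-minute span, so that row has True in both dummy columns, while the row for the later span has True only for the OK action.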

2.1.1 Results

Setting the target to be tenured_space_used_wmax, we train two models, one with just the dummy columns of the user actions and the other including the attributes from JVM statistics.

Figure 3: True vs. Predicted for tenured_space_used_wmax (with user values)

Figure 4: Feature importance of model having only user values (dummy columns)

The model detects no relation between the user actions and the tenured space used, as seen in Figure 3 and Figure 4. The accuracy on the test data is 24%.

Figure 5: True vs. Predicted for tenured_space_used_wmax (with JVM statistics)

Figure 6: Feature importance of model having user values (dummy columns) and JVM statistics

On the other hand, the model predicts the tenured space used much better when the attributes of the JVM statistics are included. Feature importance and accuracy are both reasonable with these attributes (refer to Figure 5 and Figure 6). The accuracy is 87.63% when validated with the test data.
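The text does not say which metric the reported accuracy refers to. For regression models, a common score is the coefficient of determination (R²), so the sketch below assumes that metric purely for illustration. The function name and sample values are made up.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # unexplained
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)          # total variance
    return 1.0 - ss_res / ss_tot

# Illustrative values only, not taken from the experiments above.
perfect = r_squared([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
baseline = r_squared([1.0, 2.0, 3.0], [2.0, 2.0, 2.0])
```

A model that always predicts the mean of the target scores 0, and a perfect model scores 1, which gives a scale for reading figures such as 24% and 87.63% if they are indeed R² values.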

2.2 Clustering based on JVM statistics and allocating user actions per cluster

The results in Section 2.1.1 show a good correlation among the JVM statistics attributes. The attributes are as follows:

- classes_loaded_currently

- classes_loaded_total

- compilation_time_total

- gc_minor_count_total

- gc_minor_time_total

- gc_full_time_total

- vm_uptime

- tenured_space_used_wmax

Given the good correlation, these features may form separate clusters. K-means clustering is performed on these features to explore that relationship. To learn more about K-means clustering, refer to the documentation here. This also helps us determine which user actions are prevalent within each cluster. Further, we can apply the clustering model to live data to determine which category the user activity falls under. The idea is to categorise the user actions, so that some actions are associated with higher memory usage while others require less memory. Figure 7 shows the parameters used for K-means.
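For readers unfamiliar with the algorithm, a minimal K-means sketch in plain Python follows. It is not the toolkit's implementation: the initialisation, the fixed iteration count, the value of k and the sample points are assumptions chosen to keep the example short.

```python
import math
import random

def assign(points, centroids):
    """Index of the nearest centroid (Euclidean distance) for every point."""
    return [min(range(len(centroids)), key=lambda c: math.dist(p, centroids[c]))
            for p in points]

def kmeans(points, k, n_iter=20, seed=0):
    """Plain K-means: alternate between assignment and centroid update."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialise from k distinct data points
    for _ in range(n_iter):
        labels = assign(points, centroids)
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = tuple(sum(col) / len(members)
                                     for col in zip(*members))
    return centroids, assign(points, centroids)

# Hypothetical rows with two features, e.g.
# (tenured_space_used_wmax, compilation_time_total).
points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0),
          (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
centroids, labels = kmeans(points, k=2)
```

With two well-separated groups like these, the first three points end up in one cluster and the last three in the other. The cluster numbers themselves carry no meaning, which is the same interpretation problem discussed in the results.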

2.2.1 Results

Figure 8 shows the clusters that are formed when running the model. We see a scatter matrix of the data points and how they form a group in the feature space between two attributes.

For example, Figure 9 shows a scatter plot between two attributes (tenured_space_used_wmax and compilation_time_total). Three distinct clusters are formed for these two features (Cluster 0 – orange, Cluster 1 – blue, Cluster 2 – brown).

Figure 9: Scatter plot between tenured_space_used_wmax and compilation_time_total

The limitation we face is figuring out what each cluster represents. In this case, Cluster 2 has data points with higher tenured_space_used_wmax, but that does not necessarily mean that all data points in this cluster have high tenured space values. Still, this gives us a starting point to explore other methods and techniques that could provide an answer.

After clustering, we aggregate the count of each label in a cluster to find out which user actions are most frequent in each cluster. Figure 10 shows that Cluster 0 has tab_box:tab_changed() with the highest count, followed by image_toggle_item and sw_message_dialog:complete(). This varies for each cluster, but from the overall count, we can list the user actions with the highest number of occurrences.

Figure 10: User action (label) count within each cluster

Below, we sort the top 5 user actions per cluster.

For Cluster 0:

| label | Cluster_0 |
| --- | --- |
| Tab Label:tab_changed:tab_box:tab_changed() | 1993 |
| Select Mode:image_toggle_item:radio_group: | 907 |
| OK:complete:sw_message_dialog:complete() | 626 |
| Tab Label:tab_changed:object_control_framework:choose_tab_box_page() | 462 |
| Object Control:tab_selected:tab_box:tab_selected() | 437 |

For Cluster 1:

| label | Cluster_1 |
| --- | --- |
| Select Mode:image_toggle_item:radio_group: | 1215 |
| Tab Label:tab_changed:tab_box:tab_changed() | 1087 |
| OK:complete:sw_message_dialog:complete() | 886 |
| Yes:complete:sw_message_dialog:complete() | 852 |
| Select Value:sub_dialog:in_place_choice_field_editor:activate_sub_dialog() | 780 |

For Cluster 2:

| label | Cluster_2 |
| --- | --- |
| Select Mode:image_toggle_item:radio_group: | 408 |
| Yes:complete:sw_message_dialog:complete() | 316 |
| OK:complete:sw_message_dialog:complete() | 296 |
| Clear Trail:clear:dm_map_trail:clear() | 250 |
| Trail Mode:image_toggle_item:radio_group: | 231 |

For Cluster 3:

| label | Cluster_3 |
| --- | --- |
| OK:complete:sw_message_dialog:complete() | 12 |
| Yes:complete:sw_message_dialog:complete() | 5 |
| Tab Label:tab_changed:tab_box:tab_changed() | 4 |
| Object Control:tab_selected:tab_box:tab_selected() | 2 |
| Close Design:deactivate_scheme:dm_common_actions_plugin:deactivate_design() | 2 |

For Cluster 4:

| label | Cluster_4 |
| --- | --- |
| OK:complete:sw_message_dialog:complete() | 55 |
| Yes:complete:sw_message_dialog:complete() | 45 |
| Clear Trail:clear:dm_map_trail:clear() | 35 |
| Previous View:previous:history_manager:previous() | 35 |
| Splice Bundle-VhubComp:user!splice_bundle_area_vhubcomp:user!pni_fiber_app_plugin:user!splice_bundle_area_vhubcomp() | 32 |

Though the order varies, some user actions (tab_box:tab_changed, image_toggle_item and sw_message_dialog:complete) appear in the top 10 of every cluster.
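The per-cluster ranking above amounts to a grouped count followed by a sort. A minimal sketch, assuming the clustered events are available as (cluster, label) pairs; the records and the function name here are hypothetical and the counts are not the ones from the tables:

```python
from collections import Counter, defaultdict

def top_actions(records, n=5):
    """Count labels per cluster and keep the n most frequent in each."""
    counts = defaultdict(Counter)
    for cluster, label in records:
        counts[cluster][label] += 1
    return {c: counter.most_common(n) for c, counter in counts.items()}

# Hypothetical (cluster, label) pairs in the label format used above.
records = [
    (0, "Tab Label:tab_changed:tab_box:tab_changed()"),
    (0, "Tab Label:tab_changed:tab_box:tab_changed()"),
    (0, "OK:complete:sw_message_dialog:complete()"),
    (1, "OK:complete:sw_message_dialog:complete()"),
]
ranking = top_actions(records, n=5)
```

Comparing the keys that recur across the rankings of all clusters gives the list of actions that are common everywhere, as opposed to actions specific to one cluster.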

2.3 Time series plots from the Clustering

Now, we look at the time series for each session to see if there is any pattern to the cluster formation. The session ID with the highest count (1632) is taken. Figure 11 shows the time series of one session for each attribute from JVM statistics. Apart from tenured_space_used_wmax, we notice a linear relationship between the clusters and the other features. The data points in Cluster 1 have low values, while Cluster 3 has high values. Cluster 2 is formed in the middle. This indicates that as a session is used for longer, its data points move into Cluster 3, which has high values.

This shows a pattern in how the clusters are formed, and it suggests a way to apply the model to incoming data. If a data point falls into a cluster with high memory usage, an alert could be issued notifying the user that further use could result in low performance. The user is free to ignore the warning. The model could also give incorrect results at times, since it is not 100% accurate. Because clustering is an unsupervised approach, there is no ground truth against which to validate the model. Further experimentation with a larger subset of data is required to build a better model.
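The alerting idea could look roughly like the sketch below, which assigns an incoming data point to its nearest cluster centre and returns a warning if that cluster is flagged as high usage. The centroid values, the choice of Cluster 3 as the flagged cluster and the function names are all assumptions for illustration.

```python
import math

# Hypothetical centroids in standardised feature space, keyed by cluster id.
CENTROIDS = {1: (-1.0, -1.0), 2: (0.0, 0.0), 3: (2.0, 2.0)}
HIGH_USAGE_CLUSTERS = {3}  # assumption: Cluster 3 holds the high values

def nearest_cluster(point, centroids):
    """Return the id of the centroid closest to the point."""
    return min(centroids, key=lambda cid: math.dist(point, centroids[cid]))

def check_point(point, centroids=CENTROIDS, high=HIGH_USAGE_CLUSTERS):
    """Return a warning message for high-usage clusters, otherwise None."""
    cluster = nearest_cluster(point, centroids)
    if cluster in high:
        return (f"Warning: session is in Cluster {cluster}; "
                "further use could reduce performance.")
    return None

alert = check_point((1.9, 2.1))     # close to the Cluster 3 centroid
no_alert = check_point((-0.9, -1.1))  # close to the Cluster 1 centroid
```

Returning a message instead of blocking the action keeps the warning optional, in line with the point that the user may choose not to heed it.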

Figure 11: Time series plot for JVM attributes

3. Conclusion and Recommendations

To summarise, we took one use case and implemented a few ML approaches. The results are largely inconclusive, as no clear, actionable insight could be drawn from them. One way of improving the outcomes is to examine the behaviour of the time series further and assign a distinct category to each cluster.

Even though the time series of each session forms a pattern, this does not necessarily provide a straightforward solution to our problem. Future research could look at datasets other than JVM statistics to complement the user actions. There could also be processes running in the background on the server that cause the poor performance; looking at other datasets might help in this case.

The performance indicator for each user action shows the duration it takes to complete the activity. This could also be considered and added as one of the input variables.

Arun Venugopal

Software Developer