A few years ago, while I was working as a software consultant with an energy company in the Netherlands, we suddenly encountered major issues with our infrastructure. One by one, our databases ‘disappeared’ and our GIS service went offline. When I called the system administrator, he told me to take a closer look at the software. After all, the light on the server was green, and in his opinion that meant the server was fine. I was convinced the hard disks were failing, but I had no idea how to prove it.

These days, everybody is convinced that software monitoring is a good idea. But how deep does that monitoring really go? Our virtualized servers were covered by every type of monitoring imaginable, and we monitored our huge SharePoint and Exchange installations as well. Yet our vitally important Asset Management System was still running in a cloudy environment.

In situations like these, the limits of conventional software monitoring can easily lead to unintended consequences. We often see our clients’ application developers create custom logging solutions that automatically send logs from batch processes and user applications to rather awkward destinations. I once saw an otherwise intelligent colleague build a script that scanned logs for specific phrases and e-mailed him the results. An ingenious solution, to be sure, but an altogether too fragmented approach to safeguarding the wellbeing of an essential asset management tool.

The value of a comprehensive approach

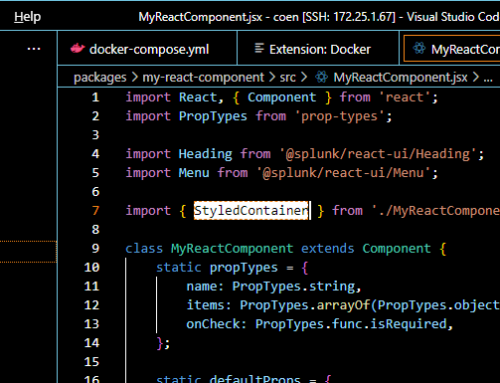

Our search for a more holistic approach to our monitoring challenges led us to a tool called Splunk®. This log analyzer can present users with a clear overview of all the logs that servers and server applications produce. After examining the tool, we began to wonder: what if we generated our own logs and ran them through it? What useful information would that give us?

We quickly discovered that our existing log files were not suitable for automatic processing. For starters, they contained many lines of useless information. Other lines seemed quite relevant but lacked the essential details needed to spot the problems we wanted to solve. Our logs had simply never been written with this kind of automatic processing in mind. As a result, we realized we also needed to improve the logs themselves.

But where to begin? What do logs need in order to be useful? First, they need to be complete, which means every event needs to be logged; only then do users get real insight into how their numbers change. Second, logs need to provide enough detail to help users get a grip on the issue they are investigating or monitoring. And finally, logs need to be accurate, so that you can draw the right conclusions from your measurements.

This cannot be achieved by simply adding a print statement to the software. To create useful logs that are well-suited to automatic processing, you need to take a comprehensive approach.
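To make the three requirements above more concrete, here is a minimal Python sketch of structured logging, using only the standard `logging` module. Every event is written as one JSON object per line, carrying a UTC timestamp, the action, the user, whether it succeeded and how long it took. This is an assumption about what a useful, machine-readable log line could look like, not the format that Diagnostics or Splunk® requires; the helper names (`JsonLineFormatter`, `make_logger`, `timed_event`) and the field names are hypothetical.

```python
import json
import logging
import time
from contextlib import contextmanager
from datetime import datetime, timezone


class JsonLineFormatter(logging.Formatter):
    """Render each log record as a single JSON object on one line."""

    def format(self, record: logging.LogRecord) -> str:
        event = {
            # UTC ISO 8601 timestamp so events from different servers line up.
            "time": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Structured details are passed in via extra={"fields": {...}}.
        event.update(getattr(record, "fields", {}))
        return json.dumps(event, sort_keys=True)


def make_logger(name: str, stream) -> logging.Logger:
    """Create a logger that writes JSON lines to the given stream."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonLineFormatter())
    logger.handlers.clear()
    logger.addHandler(handler)
    logger.propagate = False
    return logger


@contextmanager
def timed_event(logger: logging.Logger, action: str, user: str, **details):
    """Log one action (click, query, map render) with its outcome and duration."""
    start = time.perf_counter()
    status = "ok"
    try:
        yield
    except Exception:
        status = "error"
        raise  # the failure is logged below, then re-raised to the caller
    finally:
        duration_ms = round((time.perf_counter() - start) * 1000, 1)
        level = logging.INFO if status == "ok" else logging.ERROR
        fields = {"action": action, "user": user, "status": status,
                  "duration_ms": duration_ms, **details}
        logger.log(level, action, extra={"fields": fields})
```

Wrapping each click, database query or map render in `timed_event` yields one self-describing line per event, so a log analyzer can count and filter events by field rather than by scanning for phrases.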

Building a better mousetrap

Once you have access to logs that are complete, detailed and accurate, you will be able to track anything your users do: every click, every database query performed by the application, every map render action the GIS performs. And you’ll be able to count on accurate measurements throughout.

Realworld Software Products B.V. built Diagnostics on top of Splunk® using standard dashboards that present logged information in a way that is both complete and accurate. This immediately raised new questions: exactly which information should the tool present to the person doing the monitoring, and how should that information be presented?

To tackle these questions, we are currently defining KPIs for the users of the monitoring system. And that’s something we could really use your help with. We need input from users to determine the optimal representation of monitoring information – your input.

Defining the right KPIs for the job

To be effective, KPIs need to be SMART. The measurement should have a Specific purpose for your business. It should be Measurable, in that it yields a genuine value. The norm it defines must be Achievable, and the improvement it proposes must be Relevant to your success. Last but certainly not least, the KPI must be Time-sensitive, with values or outcomes shown for a predefined, relevant period of time.
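As an illustration of how the SMART criteria might translate into something a dashboard computes, the Python sketch below evaluates a hypothetical KPI over logged events: the share of map renders that finish within a threshold during a fixed time window, compared against a target. The KPI itself, the `Event` and `Kpi` types and the `evaluate` function are assumptions for illustration only. Whether a KPI is Relevant is a business judgment that no code can capture.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, Optional, Tuple


@dataclass(frozen=True)
class Event:
    """One logged event, e.g. parsed from a structured log line."""
    time: datetime
    action: str
    duration_ms: float


@dataclass(frozen=True)
class Kpi:
    name: str            # Specific: what the KPI is about
    action: str          # which logged events it measures
    threshold_ms: float  # Measurable: an event passes if it takes at most this long
    target_ratio: float  # Achievable: the share of events that must pass
    window: timedelta    # Time-sensitive: the period the KPI is evaluated over


def evaluate(kpi: Kpi, events: Iterable[Event], now: datetime) -> Tuple[Optional[float], bool]:
    """Return (share of passing events, target met?) for the window ending at `now`."""
    start = now - kpi.window
    relevant = [e for e in events if e.action == kpi.action and start <= e.time <= now]
    if not relevant:
        # No data in the window: report the KPI as not met rather than guessing.
        return None, False
    passed = sum(1 for e in relevant if e.duration_ms <= kpi.threshold_ms)
    ratio = passed / len(relevant)
    return ratio, ratio >= kpi.target_ratio
```

Different roles could reuse the same evaluation with their own `Kpi` definitions, each with a threshold, target and window that suit their responsibilities.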

Of course, managers responsible for Asset Management have different KPIs than System Administrators, who in turn have different KPIs than the Functional Maintainers responsible for training users.

Now that we’ve tackled the challenge of logging information comprehensively, our primary challenge has become defining these KPIs and designing the right dashboards to show both their current values and how they develop over time.

Which brings us back to my earlier example: the system administrator’s green light. With Diagnostics, we now have an asset management monitoring tool that can clearly show whether the light is truly green, leaving no doubt as to whether a defined KPI is actually met. Combined with administrative monitoring, which Splunk® also supports, this reveals the true status of our Asset Management Software.